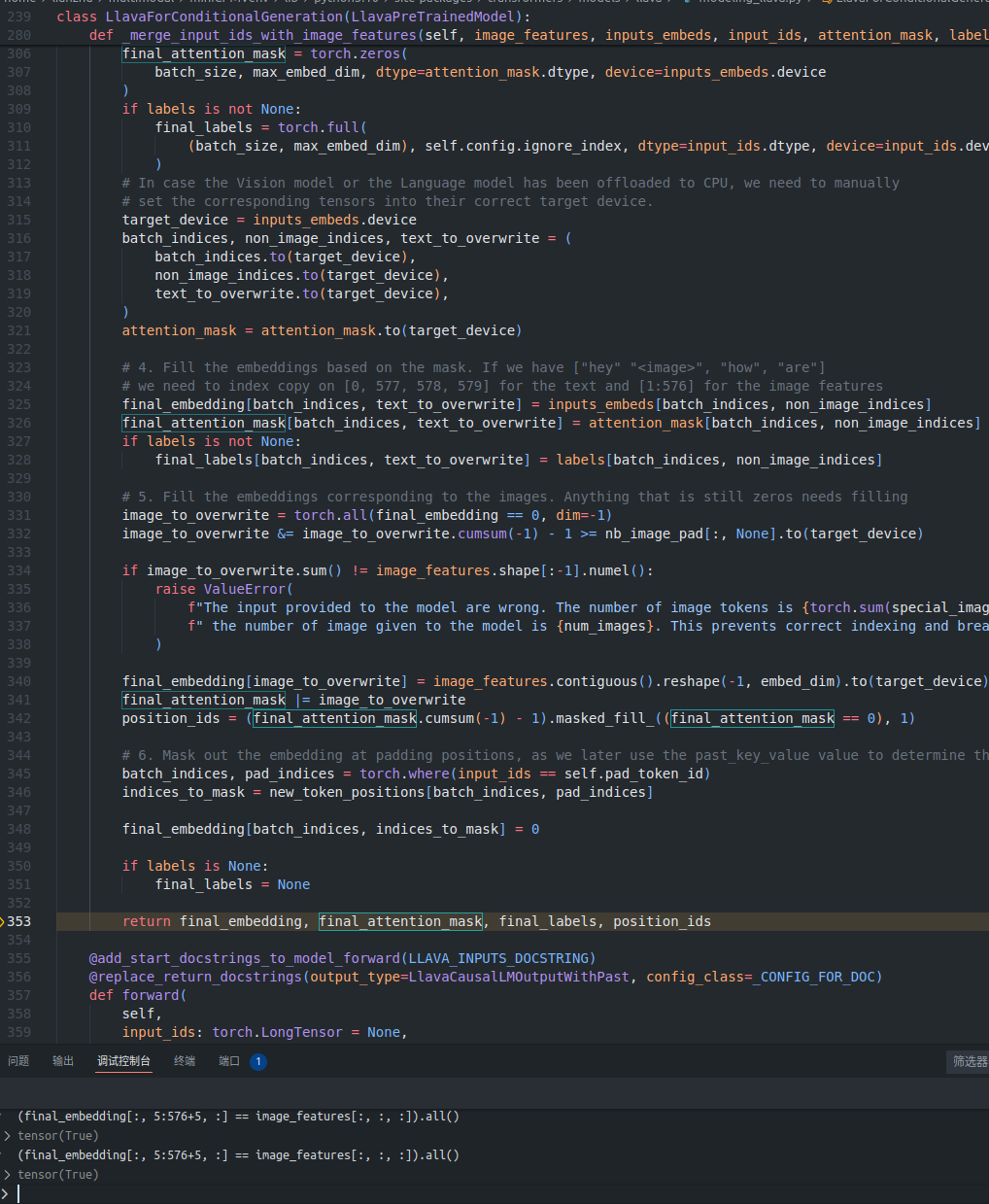

LlavaForConditionalGeneration(
  (vision_tower): CLIPVisionModel(
    (vision_model): CLIPVisionTransformer(
      (embeddings): CLIPVisionEmbeddings(
        (patch_embedding): Conv2d(3, 1024, kernel_size=(14, 14), stride=(14, 14), bias=False)
        (position_embedding): Embedding(577, 1024)
      )
      (pre_layrnorm): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
      (encoder): CLIPEncoder(
        (layers): ModuleList(
          (0-23): 24 x CLIPEncoderLayer(
            (self_attn): CLIPAttention(
              (k_proj): Linear(in_features=1024, out_features=1024, bias=True)
              (v_proj): Linear(in_features=1024, out_features=1024, bias=True)
              (q_proj): Linear(in_features=1024, out_features=1024, bias=True)
              (out_proj): Linear(in_features=1024, out_features=1024, bias=True)
            )
            (layer_norm1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
            (mlp): CLIPMLP(
              (activation_fn): QuickGELUActivation()
              (fc1): Linear(in_features=1024, out_features=4096, bias=True)
              (fc2): Linear(in_features=4096, out_features=1024, bias=True)
            )
            (layer_norm2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
          )
        )
      )
      (post_layernorm): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
    )
  )
  (multi_modal_projector): LlavaMultiModalProjector(
    (linear_1): Linear(in_features=1024, out_features=4096, bias=True)
    (act): GELUActivation()
    (linear_2): Linear(in_features=4096, out_features=4096, bias=True)
  )
  (language_model): LlamaForCausalLM(
    (model): LlamaModel(
      (embed_tokens): Embedding(32064, 4096)
      (layers): ModuleList(
        (0-31): 32 x LlamaDecoderLayer(
          (self_attn): LlamaSdpaAttention(
            (q_proj): Linear(in_features=4096, out_features=4096, bias=False)
            (k_proj): Linear(in_features=4096, out_features=4096, bias=False)
            (v_proj): Linear(in_features=4096, out_features=4096, bias=False)
            (o_proj): Linear(in_features=4096, out_features=4096, bias=False)
            (rotary_emb): LlamaRotaryEmbedding()
          )
          (mlp): LlamaMLP(
            (gate_proj): Linear(in_features=4096, out_features=11008, bias=False)
            (up_proj): Linear(in_features=4096, out_features=11008, bias=False)
            (down_proj): Linear(in_features=11008, out_features=4096, bias=False)
            (act_fn): SiLU()
          )
          (input_layernorm): LlamaRMSNorm()
          (post_attention_layernorm): LlamaRMSNorm()
        )
      )
      (norm): LlamaRMSNorm()
    )
    (lm_head): Linear(in_features=4096, out_features=32064, bias=False)
  )
)
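
As a rough sanity check on the shapes in the printout, the short sketch below recomputes a few numbers from the layer sizes alone, without loading the model. It assumes that one of the 577 position slots is a class token and that the remaining patches form a square grid; the helper name `linear_params` is made up for this illustration and is not part of any library.

```python
import math


def linear_params(in_features: int, out_features: int, bias: bool = True) -> int:
    """Parameter count of a dense layer: weight matrix plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)


# Values copied from the printed module tree.
PATCH_SIZE = 14        # Conv2d kernel_size / stride in patch_embedding
NUM_POSITIONS = 577    # rows of position_embedding
VISION_DIM = 1024      # CLIP hidden size
TEXT_DIM = 4096        # Llama hidden size

# Assumption: one position is a class token, the rest are image patches.
num_patches = NUM_POSITIONS - 1
grid = math.isqrt(num_patches)
assert grid * grid == num_patches, "patches do not form a square grid"

# Input resolution implied by the grid and the patch size.
image_size = grid * PATCH_SIZE

# The projector maps vision features (1024) into the language model width (4096).
projector_params = (
    linear_params(VISION_DIM, TEXT_DIM)    # linear_1, bias=True
    + linear_params(TEXT_DIM, TEXT_DIM)    # linear_2, bias=True
)

print(f"patch grid: {grid} x {grid} ({num_patches} patches)")
print(f"implied input resolution: {image_size} x {image_size}")
print(f"projector parameters: {projector_params:,}")
```

Under those assumptions the vision tower sees a 24 x 24 grid of patches, which corresponds to a 336 x 336 input, and the two-layer projector holds roughly 21M parameters, small next to the 32-layer language model it feeds.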